Introducing SUSE Enterprise Storage 6 (Part 1)

I received a training request on SUSE Enterprise Storage 6 (SES6), so I may as well prepare the material online where everyone can reference it in the future. It is a four-part training session rather than a hands-on cluster operation workshop: think of it as a knowledge transfer that gets you ready to ask more difficult questions next time.

Here is the outline:

Introduction:

Pool, Data and Placement:

CLI — Don’t crash the cluster in day 1:

Troubleshooting in day 2:

Introduction

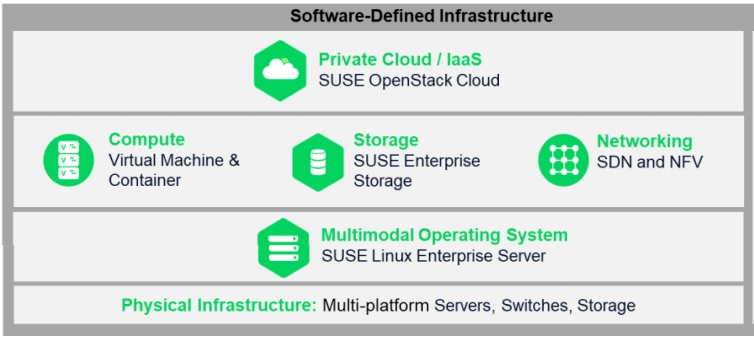

Software Defined Infrastructure (SDI) is everywhere. What is it?

- Software Defined Compute (SDC)

- Software Defined Network (SDN)

- Software Defined Storage (SDS)

Abstracting the hardware provides flexibility and elasticity. It doesn’t matter what kind of CPU you are running on, x86 or ARM. The network and compute resources are stacked together, automated, and exposed programmatically to do the work any application needs.

SUSE Enterprise Storage 6 is based on the Ceph Nautilus release

Ceph is a distributed, clustered storage system. It is well known for its native Cinder block storage support for images and volumes in OpenStack. Compared to traditional storage, it is highly scalable, reliable, and self-managing, up to petabytes in size. Another major benefit is that it can run on basically any commodity hardware. Adding more storage nodes to the cluster to increase capacity is very simple: there is no need to rebuild a storage array, and data is redistributed automatically. There is no single point of failure, since no node in the cluster stores important information alone, and the system is highly configurable.

Software Defined Storage (SDS), how should we define SDS?

SDS uses software and commodity hardware to handle and manage all the data storage logic. It can adapt much faster to market needs when storage capacity has to expand. Abstracting dedicated storage hardware into SDS allows us to scale faster and lower costs, in terms of both capital and operating expenditure. It is a flexible and robust way of delivering storage for applications.

Ceph is not an abbreviation but a short form of cephalopod, an octopus-like sea creature. As you may also notice, the Ceph releases are named in alphabetical order, and many of them are sea creatures as well.

Argonaut, Bobtail, Cuttlefish, Dumpling, Emperor, Firefly, Giant, Hammer, Infernalis, Jewel, Kraken, Luminous, Mimic, Nautilus.

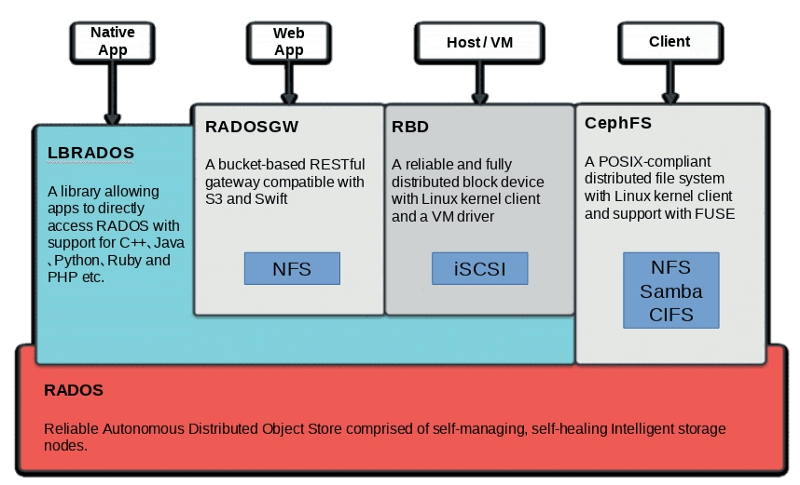

RADOS is an abbreviation: Reliable Autonomic Distributed Object Store

As the name suggests, RADOS is the backend object storage service that provides the foundation for data management in Ceph. It allows us to use commodity hardware to provide reliable, extensible, scalable object storage over thousands of storage devices. Its intelligent, self-managing storage layer provides replication, failure detection, and recovery in real time.

Scaling out vs Scaling up

Scaling out in SDS means adding more nodes or storage resources to the cluster network. There is basically no hard limit, and every node added to the cluster provides more fault tolerance and more capacity at the same time. Since the hardware is not specialized, the price is also much lower than dedicated storage hardware, unless you are comparing against consumer NAS. But a daisy-chained NAS solution cannot match the close-to-linear performance and capacity growth, with automatic management, that scaling out delivers.

Scaling up is what traditional storage does: it uses high-performance hardware dedicated to providing data storage for enterprise applications. This kind of system design provides a finite amount of storage space, which can be difficult to expand and very expensive to acquire. Meanwhile, the fault tolerance level may not improve by doing so, and all applications share the same cost per byte.

SES Architecture

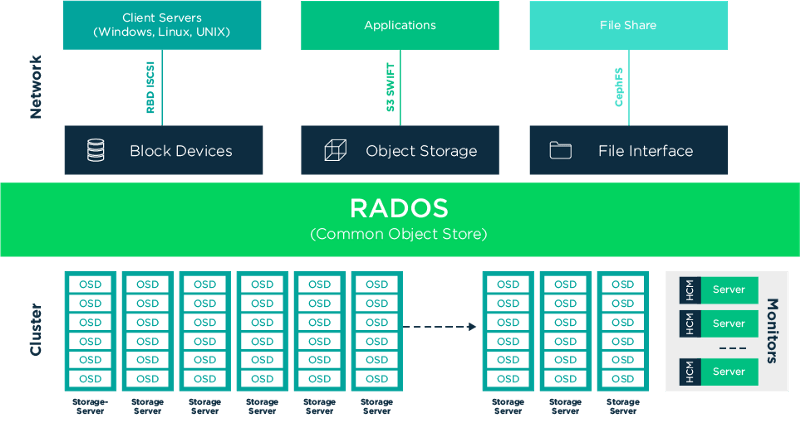

Block Device, Object Storage, and File System are the three interfaces that clients and applications can use to gain access to Ceph storage.

Object Storage Daemon, Monitor Servers, and Manager Daemons

- Object Storage Daemon (OSD) manages physical disks

‒ Normally one OSD process per disk

‒ Takes care of storing data, replication, data balancing, and recovery

‒ Reports statistics and status back to MON

- Monitor Servers (MON) manage the overall cluster status and maps

‒ Normally start with 3 nodes in a cluster, then add them in multiples of 2 at every extension; keeping the count odd helps obtain a quorum and prevents split-brain

‒ Handle the MON map, MGR map, OSD map, PG map, and CRUSH map

‒ Also maintain the epoch, the history of state changes in the cluster

- Manager Daemon (MGR) handles monitoring statistics and the admin interface

‒ Keeps track of the cluster runtime statistics and metrics

‒ Provides external access to cluster object-store metadata and events
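The monitor sizing rule in the list above comes down to majority arithmetic. Here is a minimal sketch, assuming a plain strict-majority quorum; the function names are my own for illustration and are not part of Ceph.

```python
def quorum_size(monitors: int) -> int:
    """Smallest strict majority of `monitors` (illustrative helper, not a Ceph API)."""
    if monitors < 1:
        raise ValueError("a cluster needs at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can be lost while a majority still survives."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    # Compare odd and even monitor counts side by side.
    for count in range(3, 8):
        print(f"{count} MONs: quorum={quorum_size(count)}, "
              f"can lose {tolerated_failures(count)}")
```

Under this assumption, going from 3 to 4 monitors raises the quorum size without letting you lose any more monitors, which is why growing by 2 at a time (3, 5, 7) is the usual pattern.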

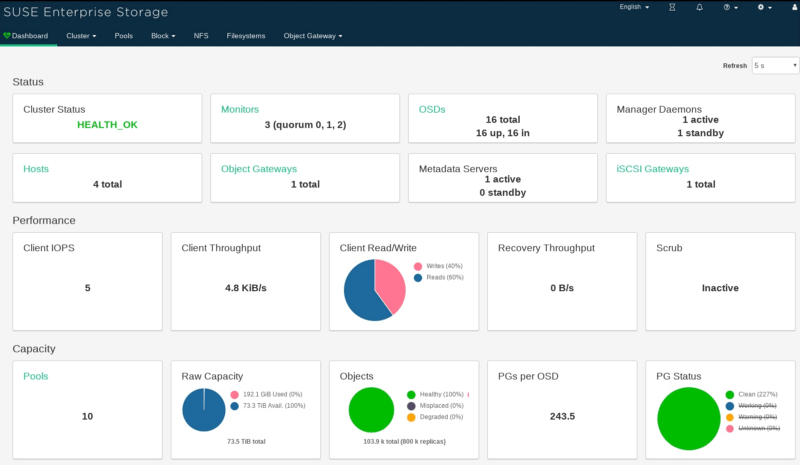

Administration and Monitoring

The Python-based Command Line Interface (CLI) is most likely the tool you will use to interact with the cluster. The admin node doesn’t need to be dedicated, but keeping its clock in sync with the cluster can be tricky if it is a laptop that you plug in and out of the cluster network. Cluster security is another concern when a pluggable laptop is running around with the cluster private key inside.

SES also provides a Salt-based life-cycle management tool called DeepSea, so a Salt master node is required to use it. All the cluster nodes need to be set up as minions that respond back to the master.

Ceph Manager comes with a dashboard that reports all the cluster metrics. It uses Prometheus as the time-series data store and Grafana as the graphing tool to provide visual infographics on the web-based dashboard.
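To give a feel for working with the CLI from a script, here is a rough sketch that asks the cluster for its status as JSON and pulls out the overall health. It assumes the `ceph` command, a `ceph.conf`, and a keyring are available on the admin node. The `SAMPLE` payload is hand-written and heavily trimmed for illustration (the monitor names are made up), and the helper names are my own.

```python
import json
import subprocess


def fetch_status() -> dict:
    """Run the ceph CLI on an admin node and return the parsed status."""
    raw = subprocess.run(
        ["ceph", "status", "--format", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(raw)


def health_of(status: dict) -> str:
    """Return the overall health string, or UNKNOWN if the field is absent."""
    return status.get("health", {}).get("status", "UNKNOWN")


# Hand-written, trimmed sample for illustration only; real output has many more fields.
SAMPLE = (
    '{"health": {"status": "HEALTH_OK"}, '
    '"quorum_names": ["mon1", "mon2", "mon3"]}'
)

if __name__ == "__main__":
    status = json.loads(SAMPLE)  # swap in fetch_status() on a real admin node
    print(health_of(status), status.get("quorum_names", []))
```

Keeping the parsing separate from the subprocess call means you can try the logic on a saved JSON file before pointing it at a live cluster.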

How do we use the storage? I mean object, block, or file?

From my personal experience, there are three types of users using ceph storage: Developers, Administrators, and End-Users.

- Developers: You will most likely be using

‒ RADOS Gateway (RGW), which provides S3- or Swift-compatible APIs, or

‒ The native protocol through the librados library, which has bindings for many languages, most likely Python, C, or C++

- Administrators: You will most likely be using

‒ RADOS Block Device (RBD) for the physical or virtualized host OS

‒ iSCSI Gateway for exporting RBD images as iSCSI disks on the network

- End-Users: You will most likely be using

‒ CephFS, a traditional-style filesystem with POSIX-compatible features

‒ NFS Ganesha, providing access to RGW bucket objects or CephFS

‒ Samba (SMB/CIFS), providing access to CephFS from Windows / macOS
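For the developer path through librados, the Python bindings look roughly like the sketch below. It assumes the `rados` Python module is installed and that a pool named `training` exists; that pool name, the object name, and the `FakeIoctx` stand-in are all made up for illustration so the sketch can run without a cluster.

```python
def connect(conffile="/etc/ceph/ceph.conf"):
    """Open a cluster handle; needs the python rados bindings and a keyring."""
    import rados  # imported lazily so the helper below works without it
    cluster = rados.Rados(conffile=conffile)
    cluster.connect()
    return cluster


def store_and_fetch(ioctx, name: str, payload: bytes) -> bytes:
    """Write one object into the pool behind `ioctx` and read it back."""
    ioctx.write_full(name, payload)
    return ioctx.read(name)


class FakeIoctx:
    """In-memory stand-in for a pool I/O context (hypothetical, for dry runs)."""

    def __init__(self):
        self.objects = {}

    def write_full(self, name, data):
        self.objects[name] = data

    def read(self, name):
        return self.objects[name]


if __name__ == "__main__":
    print(store_and_fetch(FakeIoctx(), "greeting", b"hello ses6"))
    # On a real cluster (pool name "training" is an assumption):
    # cluster = connect()
    # ioctx = cluster.open_ioctx("training")
    # print(store_and_fetch(ioctx, "greeting", b"hello ses6"))
    # ioctx.close()
    # cluster.shutdown()
```

Note that there is no path or directory here: an object is just a name plus bytes inside a pool, which is the main difference from the block and file interfaces.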

This is the introduction to SES6. Let me know if you find it useful, have any questions, or spot any bugs. Thanks for reading. If you want to support me to keep writing, please clap, like, and share.
